Deploying and approving your model

The next step is to deploy your model, and then approve it so that it can generate predictions.

Deploying a model

Deploying a model allows you to use it to generate predictions on new data.

The model refinement process is different for each project you work on. Once you have a model that meets the criteria for your use case, you can deploy it. Deploying the model creates an ML deployment, which is available in the catalog.

For more information about deploying your models in Qlik Predict, see Working with ML deployments.

Do the following:

- Switch back to the Models tab in the experiment.
- Click the Best model card above the model metrics table to select the best overall model.
- Click the menu button next to the model marked with the best-model icon.
- Click Deploy.
- Select the option to deploy the model to A new deployment.
- Type a name for your deployment, such as Customer churn deployment.
- If needed, adjust the space, description, and tags.
- Click Deploy.

Deploying a model in Qlik Predict

Your new ML deployment is now available in the catalog.

Click Open, or navigate back to the catalog and open the ML deployment.

Approving your model

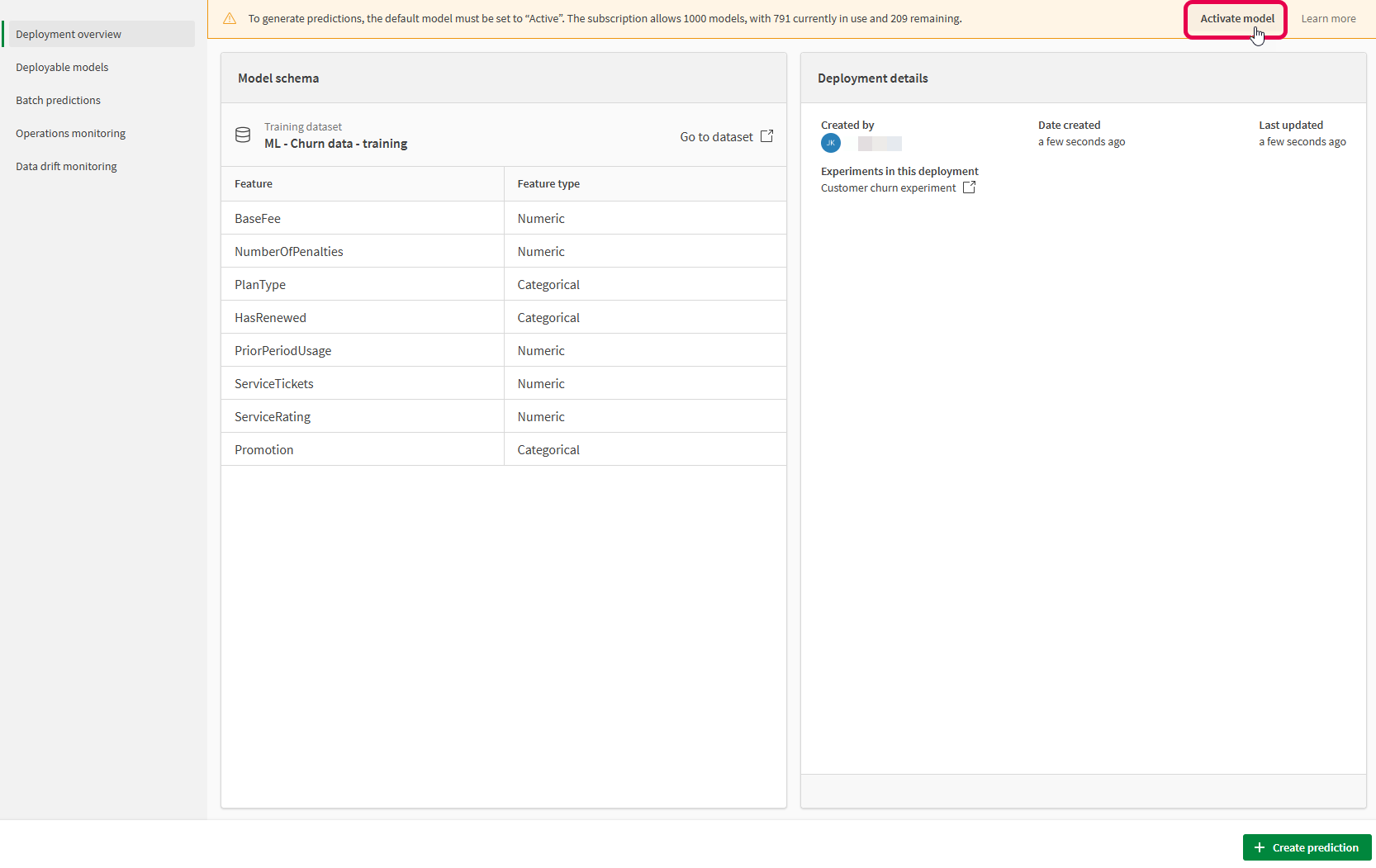

At the top of the ML deployment interface, a banner indicates that a model approver needs to activate the default model before it can be used to make predictions.

Do the following:

- In the banner at the top of the ML deployment, click Activate model.
- In the dialog that opens, click Activate model to confirm.

Approving a deployed model in an ML deployment

For more information about approving models, see Approving deployed models.

You can now proceed to creating predictions with your ML deployment. Move on to the next topic in this tutorial.