Apache Parquet is a columnar storage format, highly efficient for storing and querying large datasets. In Qlik Sense, you can read data from Parquet files, and store tables as Parquet files.

Parquet allows efficient querying of specific columns in a table without reading the entire table, which makes it well suited to big data processing. Parquet also supports efficient compression and encoding of data, which can further reduce storage space and improve query performance.
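To make the columnar idea concrete, here is a minimal Python sketch. It is a toy illustration, not Parquet's on-disk format: it pivots row-oriented records into one list per column, so reading a single column never touches the others, and applies a simple run-length encoding to show why columns with repeated values compress well. Parquet's real encodings (such as dictionary encoding and its RLE/bit-packing hybrid) are more elaborate. The function names and sample data are hypothetical.

```python
from itertools import groupby
from typing import Any, Dict, List, Tuple


def to_columns(rows: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Pivot row-oriented records into one list of values per column.

    Assumes every row has the same field names (a simplification).
    """
    columns: Dict[str, List[Any]] = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def run_length_encode(values: List[Any]) -> List[Tuple[Any, int]]:
    """Collapse runs of consecutive identical values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


rows = [
    {"country": "SE", "amount": 10},
    {"country": "SE", "amount": 20},
    {"country": "SE", "amount": 5},
    {"country": "DK", "amount": 7},
]
columns = to_columns(rows)
amounts = columns["amount"]                       # reads only the amount column
encoded_country = run_length_encode(columns["country"])
```

Here `amounts` is `[10, 20, 5, 7]` and `encoded_country` is `[("SE", 3), ("DK", 1)]`: four stored values shrink to two runs because equal values sit next to each other in a column.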

Creating Parquet files

You can create Parquet files using the Store command in the script. In the script, state that a previously read table, or part of it, is to be exported to an explicitly named file at a location of your choice. You can also nest the data you store in Parquet files.

For more information, see Store.

Reading data from Parquet files

You can read data from a Parquet file just like any other data file supported by Qlik Sense. This includes loading it with Data manager, with Data load editor, or when you add data to a new app.

For more information, see Loading data from files.

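You can also load data from a Parquet file in the data load script with the LOAD command by specifying parquet as the file format. For example, where xyz.parquet is a placeholder file name:

LOAD * from xyz.parquet (parquet);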

For more information, see Load.

Loading data from nested Parquet files

If a Parquet file contains nested data, it must be loaded with multiple LOAD statements, each specifying which subset of the data to load into each table. The Table is specifier provides a path to the group node in the schema to be loaded.

You can use the Delimiter is specifier to specify how node names are combined into field names. If a LOAD statement for a file with nested data does not include Delimiter is, it uses the value of the script variable FieldNameDelimiter. If no delimiter is supplied and FieldNameDelimiter is not set, the default delimiter is '.' (period).
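The delimiter precedence can be summarized in a short Python sketch. This only models the rule stated above; it is not Qlik Sense code, and the function names are hypothetical. `None` stands for "not supplied" or "not set".

```python
from typing import Optional, Sequence

DEFAULT_DELIMITER = "."


def resolve_delimiter(delimiter_is: Optional[str] = None,
                      field_name_delimiter: Optional[str] = None) -> str:
    """Return the delimiter used to join node names into field names.

    Precedence: an explicit Delimiter is value, then the FieldNameDelimiter
    script variable, then the default '.'.
    """
    if delimiter_is is not None:
        return delimiter_is
    if field_name_delimiter is not None:
        return field_name_delimiter
    return DEFAULT_DELIMITER


def field_name(node_path: Sequence[str], delimiter: str) -> str:
    """Join the node names along a path, for example ('group', 'field1')."""
    return delimiter.join(node_path)
```

With no settings, `field_name(("group", "field1"), resolve_delimiter())` gives `group.field1`; with only `field_name_delimiter="_"` it gives `group_field1`, and an explicit `delimiter_is` value overrides both.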

Only nodes that match the Table is path are loaded.

Data nodes are loaded as fields, without nesting.

Group nodes group their fields by adding the group name to each field name. For example, a group named group that contains field1 and field2 is loaded as group.field1 and group.field2.

List nodes generate key fields that link the tables, for example %Key_group.list. Any group or data node inside the list must be loaded in a separate LOAD statement, which also adds a key field that links to the parent list.
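The node rules above can be modeled with a small Python sketch. It is a hypothetical illustration, not how Qlik Sense is implemented: Python dicts stand in for group nodes, lists for list nodes, and other values for data nodes. The function name `flatten_record`, the `(root)` table name, and the sequential integer key values are assumptions, and the field names Qlik Sense actually produces for list items may differ. Unlike Qlik Sense, where each list's contents need their own LOAD statement with Table is, the sketch flattens everything in one pass.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]
ROOT = "(root)"  # hypothetical name for the top-level table


def flatten_record(record: Dict[str, Any], delimiter: str = ".") -> Dict[str, List[Row]]:
    """Split one nested record into flat tables (illustrative model only)."""
    tables: Dict[str, List[Row]] = {}
    next_key = 0

    def walk(node: Dict[str, Any], path: Tuple[str, ...], row: Row) -> None:
        nonlocal next_key
        for name, value in node.items():
            child_path = path + (name,)
            field = delimiter.join(child_path)
            if isinstance(value, dict):
                # Group node: its fields join the current row with a prefixed name.
                walk(value, child_path, row)
            elif isinstance(value, list):
                # List node: a key field on the parent row links to a separate table.
                next_key += 1
                key = next_key  # keep this list's key even if nested lists add more
                key_field = f"%Key_{field}"
                row[key_field] = key
                for item in value:
                    child_row: Row = {key_field: key}
                    if isinstance(item, dict):
                        walk(item, child_path, child_row)
                    else:
                        child_row[field] = item
                    tables.setdefault(field, []).append(child_row)
            else:
                # Data node: loaded as a plain field.
                row[field] = value

    root: Row = {}
    walk(record, (), root)
    tables[ROOT] = [root]
    return tables


record = {
    "name": "Acme",
    "address": {"city": "Lund", "zip": "22100"},
    "orders": [{"id": 1}, {"id": 2}],
}
tables = flatten_record(record)
```

The group `address` becomes the fields `address.city` and `address.zip` on the root row, while the list `orders` adds `%Key_orders` to the root row and produces a separate `orders` table whose rows carry the same key.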

The following examples show the same nested Parquet file, created in the example in Storing nested data in Parquet files, loaded into an app with Data manager and with Data load editor (using both the default script from Select data and a custom script).

Example: Data manager

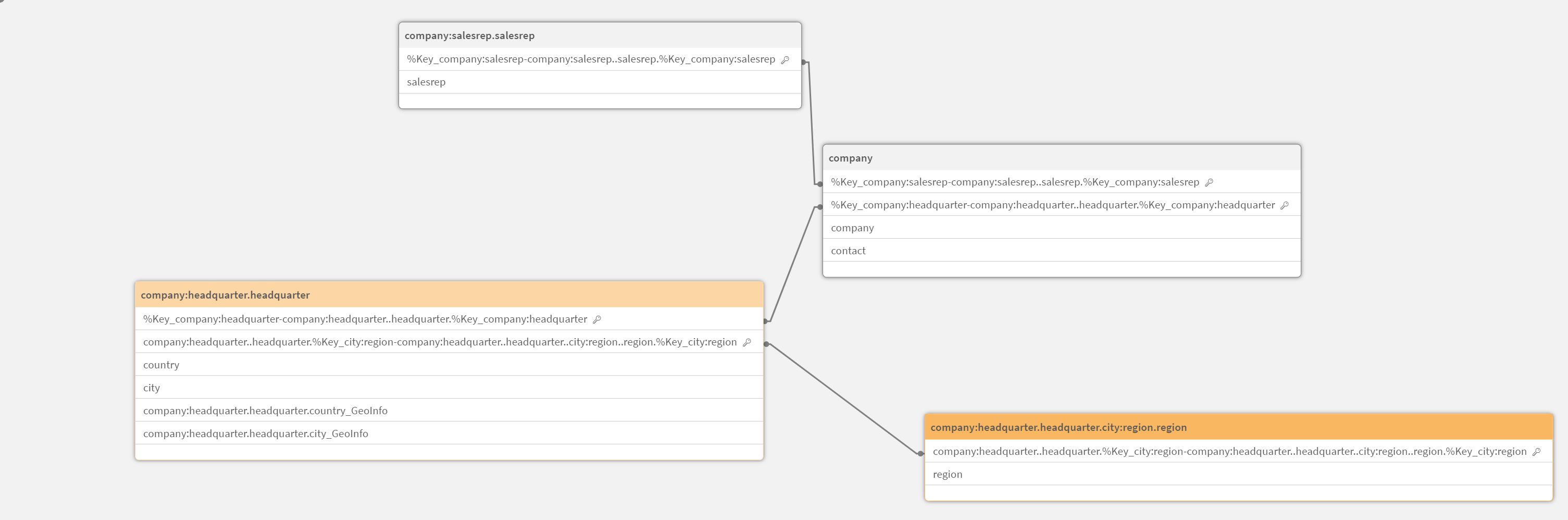

If you load company.parquet in Data manager and apply all recommended associations, you end up with the following data model:

Data model for loading company.parquet with Data manager

Example: Data load editor (Select data)

If you load the data using Select data in Data load editor, you end up with the following script:

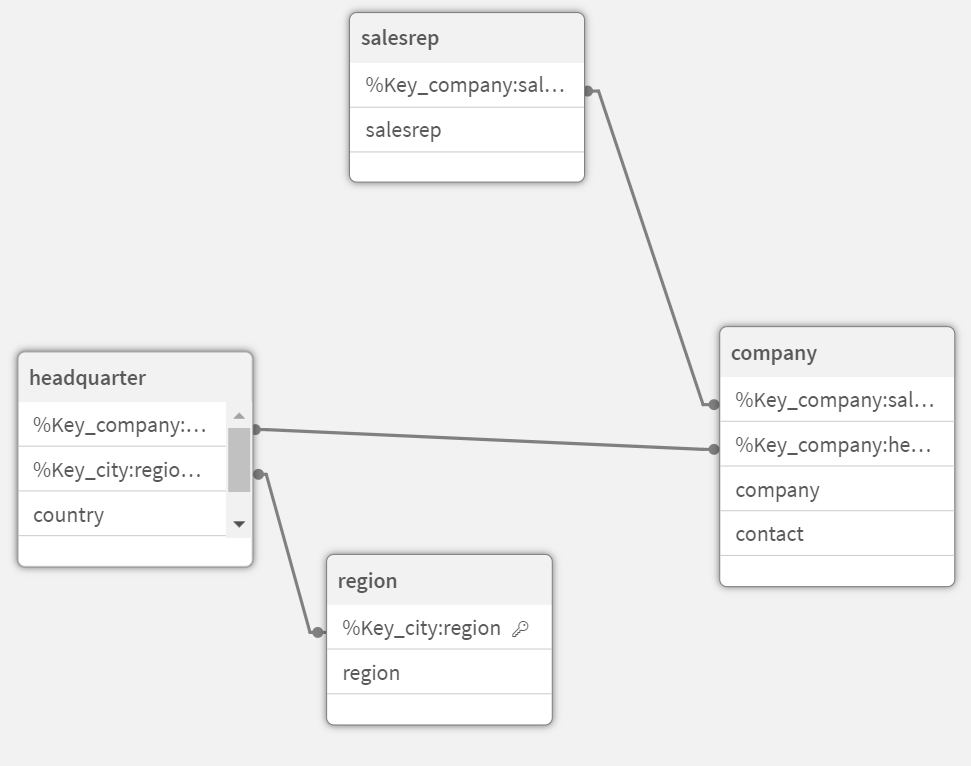

The data model looks like this in Data model viewer.

Data model for loading company.parquet with Select data in Data load editor

Example: Data load editor (Custom load script)

If you use a custom load script, you have more control over how the fields and tables are loaded from company.parquet. The following load script loads the tables and fields:

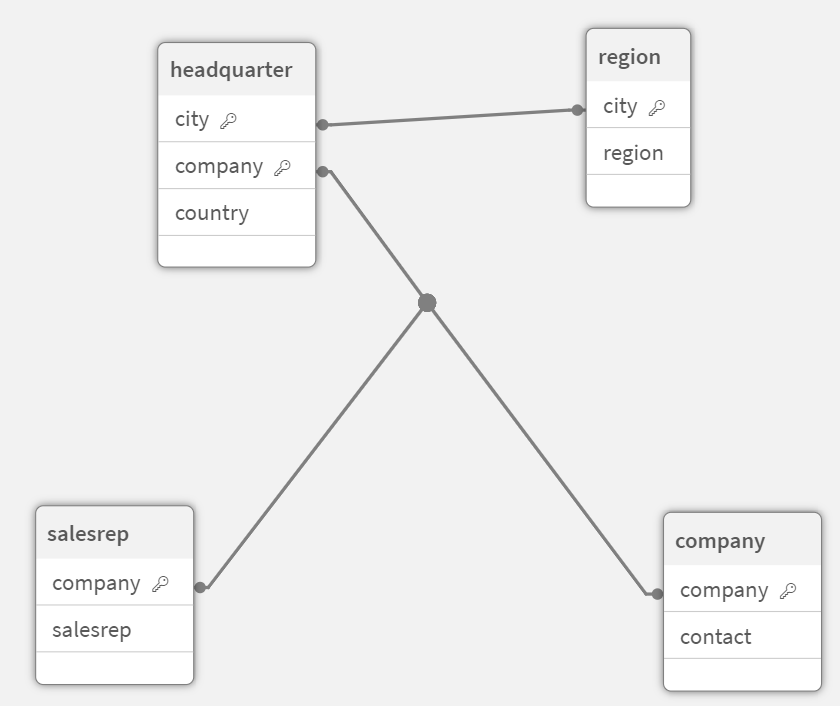

This results in the following data model, which is identical to the original data model before the data was stored in the Parquet file.

Data model for loading company.parquet with a custom script in Data load editor

Limitations

Parquet files have the following limitations:

- Parquet files that contain an int96 timestamp field may not be loaded correctly.

  Int96 is a deprecated data type that contains a timestamp without time zone information. Qlik Sense attempts to read the field as UTC, but because vendors implement int96 differently, this is not guaranteed to succeed.

  Verify the loaded data and, if required, adjust it to the correct time zone with an offset.
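When verifying int96 values outside Qlik Sense, it can help to decode them yourself. The Python sketch below assumes the widely used Impala/Hive layout (8 bytes of little-endian nanoseconds since midnight followed by 4 bytes of little-endian Julian day number); other writers may differ, which is exactly why the result is not guaranteed. It reads the value as UTC, then shows one way to apply an offset when the writer actually stored local wall-clock time. The function names and the UTC+2 offset are hypothetical, and this is not how Qlik Sense reads the field.

```python
import struct
from datetime import datetime, timedelta, timezone

JULIAN_DAY_OF_UNIX_EPOCH = 2440588  # Julian day number of 1970-01-01


def decode_int96(raw: bytes) -> datetime:
    """Decode a 12-byte int96 timestamp as UTC (assumes the Impala/Hive layout)."""
    if len(raw) != 12:
        raise ValueError("an int96 value must be exactly 12 bytes")
    # '<qi': little-endian int64 nanoseconds of the day, then int32 Julian day.
    nanos, julian_day = struct.unpack("<qi", raw)
    epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
    # timedelta has microsecond resolution, so sub-microsecond digits are dropped.
    return epoch + timedelta(days=julian_day - JULIAN_DAY_OF_UNIX_EPOCH,
                             microseconds=nanos // 1000)


def reinterpret_wall_clock(ts_utc: datetime, utc_offset_hours: float) -> datetime:
    """Keep the wall-clock reading but attach the writer's real UTC offset.

    Use this when the writer stored local time that was read as UTC.
    """
    return ts_utc.replace(tzinfo=timezone(timedelta(hours=utc_offset_hours)))
```

For example, a value written at 12:00 local time by a writer in UTC+2 decodes as 12:00 UTC; `reinterpret_wall_clock(ts, 2).astimezone(timezone.utc)` then yields the actual instant, 10:00 UTC.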